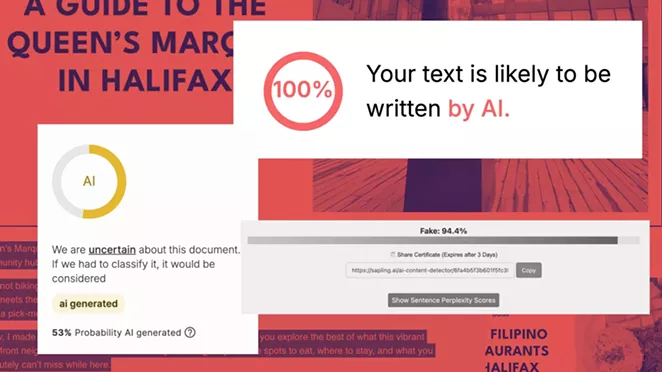

The future of AI news is here in Halifax, it seems—and god, it sucks

Halifax for comment on this, but as of press time, we have not received a response.)

The implications are staggering. If these writers do not exist, who, or what, is generating the content on The Best Halifax? How many other websites, news outlets, or social media accounts are using AI to create fake personas and stories? How can we trust the information we consume in a world where artificial intelligence can create convincing facsimiles of reality? And what does this mean for the future of journalism, storytelling, and democracy itself?

The Best Halifax may seem like a small, inconsequential blip on the radar of the internet, but it is a symptom of a much larger issue. As AI technology advances, the line between truth and fiction becomes increasingly blurred. Deepfakes, AI-generated text, and manipulated images are becoming more sophisticated and harder to detect. In a world where information is power, the ability to manipulate that information poses a serious threat to our society.

The rise of AI-generated content also raises important ethical questions. Who is responsible for the content created by AI? Can we hold AI to the same standards of accuracy, integrity, and accountability as human journalists? And how do we protect ourselves from the potential harm caused by AI-generated misinformation?

As we navigate this new digital landscape, it is crucial that we remain vigilant, skeptical, and informed. We must question the sources of information we consume, verify the authenticity of the content we encounter, and hold those who create AI-generated content accountable for their actions.

The Best Halifax may not have real writers, but the issues it raises are all too real. It is a wake-up call, a warning, and a call to action. We cannot afford to be complacent in the face of AI-generated misinformation. Our democracy, our society, and our future depend on it.